compiler optimizations (1)

For most of the 30 million software developers in the world, the compiler is a black box - just another command to type out in the terminal. Many new programmers would have never even typed out gcc or javac in the terminal and just press the ▶️ button in their IDEs.

Understanding how a compiler works can help you write better code, and develop a fresh outlook on software engineering. Nothing is just magic, it’s just mechanical!

Parsing & Lexing

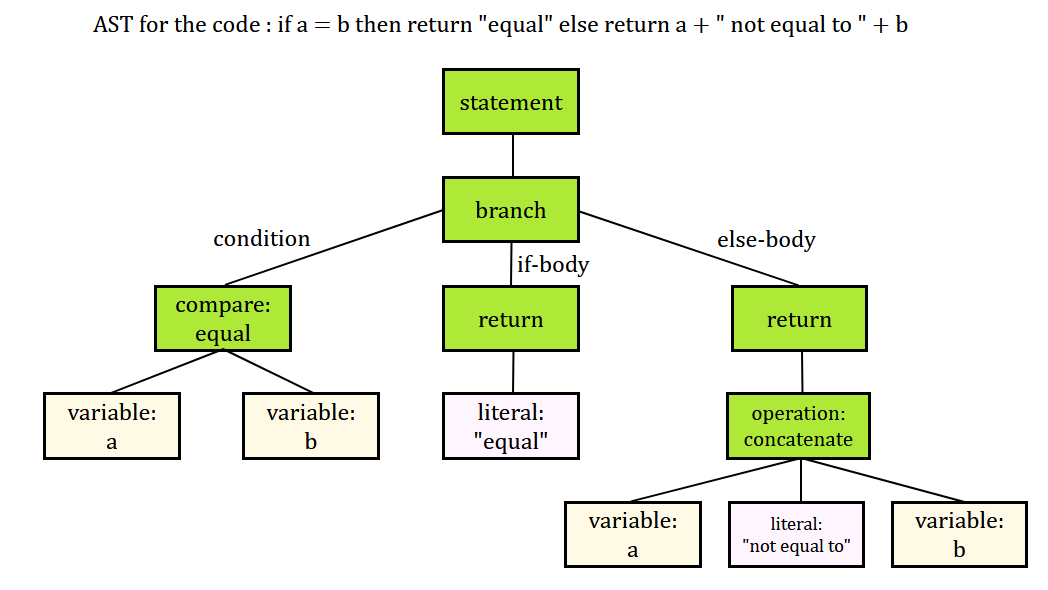

Setting up the parse tree is the boring part of compilation (according to me!), but most intro courses only focus on lexing and parsing. Most of the interesting analysis and transformation on source code happens after the creation of the parse tree and an Abstract Syntax Tree (AST).

Credit: Wikimedia Commons

The parse tree and the AST are the first of many structures that represent source code as a graph. Representing our code as a graph is a really powerful tool that will help us reason about control flow and the state of our program using formal theorems and properties of graphs (more on this later).

This blog will mainly focus on the middle end of the compiler - the part after the AST has been created. Between parsing and the middle end, there can be additional steps such as typechecking and additional semantic analysis depanding on the programming language.

Intermediate Representation

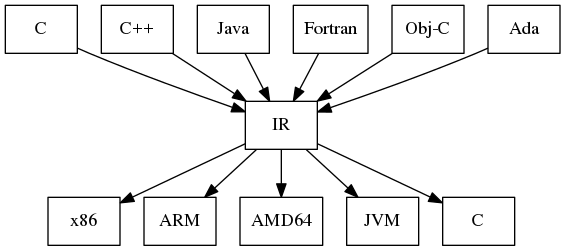

Once the AST has been created, it is usually lowered into an intermediate representation (IR). There are multiple IRs used in a compiler, with each offering different benefits and drawbacks for analysis.

Using an IR allows multiple programming languages to share the same compiler for middle end optimizations and code generation, or combine different front ends and back ends together.

Credit: https://liucs.net/cs664s16/ir.html

IRs simplify code analysis by converting an AST into a linear format, eliminating the need for recursive tree traversal. They may also additional extra information about variable mutation and control flow to help with analysis.

In this post we’ll look at two popular IRs - three address code and control flow graphs.

Three Address Code

IRs in compilers try to keep simple, and do not support complex data structures or control flow like source code does. One of the most popular IRs is three address code, where each instruction is just an operation upon two operands.

z = x op y (General form)

z = x + 3

z = x mod 3

Three address code in real compilers like LLVM will look something like this, with additional information about types:

%z = add i32 %x, 3

Longer expressions such as

z = ((x * y) + (2 * z)) / (1+n)

can easily be translated into three address form by introducing temporaries:

t0 = x * y

t1 = 2 + z

t2 = t0 + t1

t3 = 1 + n

z = t2 / t3

The process of generating three address code from an AST is mechanical and can be done through an AST traversal.

What about branching and conditional flow? By only introducing goto and label statements, we can handle for loops, if statements, etc.

if(x > 10){

y = 10

} else {

y = 5

}

becomes

start:

t0 = x > 10

if t0 == 0: goto else

y = 10

goto exit

else:

y = 5

goto exit

exit:

For loops like:

for(int i = 0; i <= 5; i++){

x = x + 1;

}

y = 10

becomes

i = 0

condition:

t0 = i <= 5

if t0 == 0: goto exit

goto body

body:

x = x + 1

i = i + 1

goto condition

exit:

y = 10

In general, three address code (with a few extensions) is enough to represent most source code structures. Reducing the variety of possible instructions in source code to a handful of instructions in three address code simplifies program analysis and reasoning.

Control Flow Graphs

Control Flow Graphs (CFGs) are the most common form of IR used in program analysis. They are a hybrid form, combining linear code within graphs.

Credit: https://www.cs.toronto.edu/~david

Each node in a CFG is a basic block, a series of instructions without branches in or out (single entry, single exit). Instructions in a basic block can be in three address code, or another representation like SSA form, as long as they are linear.

For example, the following code:

if(x > 10){

y = 10

z = 25

} else{

y = 20

z = 40

}

l = 100

consists of three basic blocks (represented here in SSA form):

BB001:

t0#0 = x#0 > 10

if t0#0 == 0: goto BB002

y#0 = 10

z#0 = 25

goto BB003

BB002:

y#1 = 20

z#1 = 40

goto BB003

BB003:

l#0 = 100

BB003 in the above example is called a join node, a node with more than incoming edge. Join nodes are harder to reason about, as they indicate that the program could have taken multiple different routes to get there.

IRs like SSA form make analysis on join nodes easier to perform by assigning a unique number to each use of a variable, meaning that each variable is only written to once. This allows us to differentiate between the value of y being assigned 10 and the value of y being assigned 20.

Edges in CFGs indicate a change in control flow and can help us reason about the state of a program at a particular point. By using powerful properties of graphs, we can reason about code that can be removed or shifted to a different block to speed up the program. The structure such transformations rely on is called dataflow analysis, which will be the topic of the next few blog posts.

Thank you for reading!

Disclaimer: I am not an expert in compilers, and just want to share some cool ideas I’ve been learning. If I’ve made an error somewhere, let me know.

Enjoy Reading This Article?

Here are some more articles you might like to read next: